I might be writing more about my cloud journey and experience with DXP Cloud. Therefore, I dedicate this post to what DXP Cloud is and how it is built, to provide more context.

# Background

At Neptune Software I work on DXP Cloud. Neptune Software creates a Developer Experience Platform (DXP), a rapid application development platform with a no-code and low-code focus.

- DXP is Neptune's ecosystem, with components like the "SAP Edition" and "Open Edition" which make up the platform (Developer Experience Platform).

- DXP Cloud is Neptune's cloud platform with adjacent services that are part of the larger DXP ecosystem.

# DXP Cloud

Traditionally, Neptune's DXP software is hosted on our customers' on-prem servers. As public cloud becomes more mainstream, it is only natural for us to build a cloud platform where our customers can use our services and run managed instances (PaaS model) of our software.

Enter DXP Cloud. Neptune started working on it back in 2020. I've been involved from the beginning, and building a cloud platform from scratch has been an exciting journey.

# High Level Design

Although we offer several services on DXP Cloud, at the time of writing the main service is "Managed Instances", a Platform-as-a-Service tailored to host DXP's Open Edition.

The customer's interface for all our DXP Cloud services is the DXP Cloud Portal. This portal is accessible via portal.neptune-software.com to all of Neptune's customers. You could compare it to the Azure Portal or the AWS Management Console.

This DXP Cloud Portal is developed with Neptune's own DXP Open Edition; at Neptune we're big fans of the dogfooding philosophy. The DXP Cloud Portal talks to the DXP Cloud Infrastructure via a REST API. We run everything, including our DXP Cloud Portal and DXP Cloud Infrastructure, on Microsoft Azure.

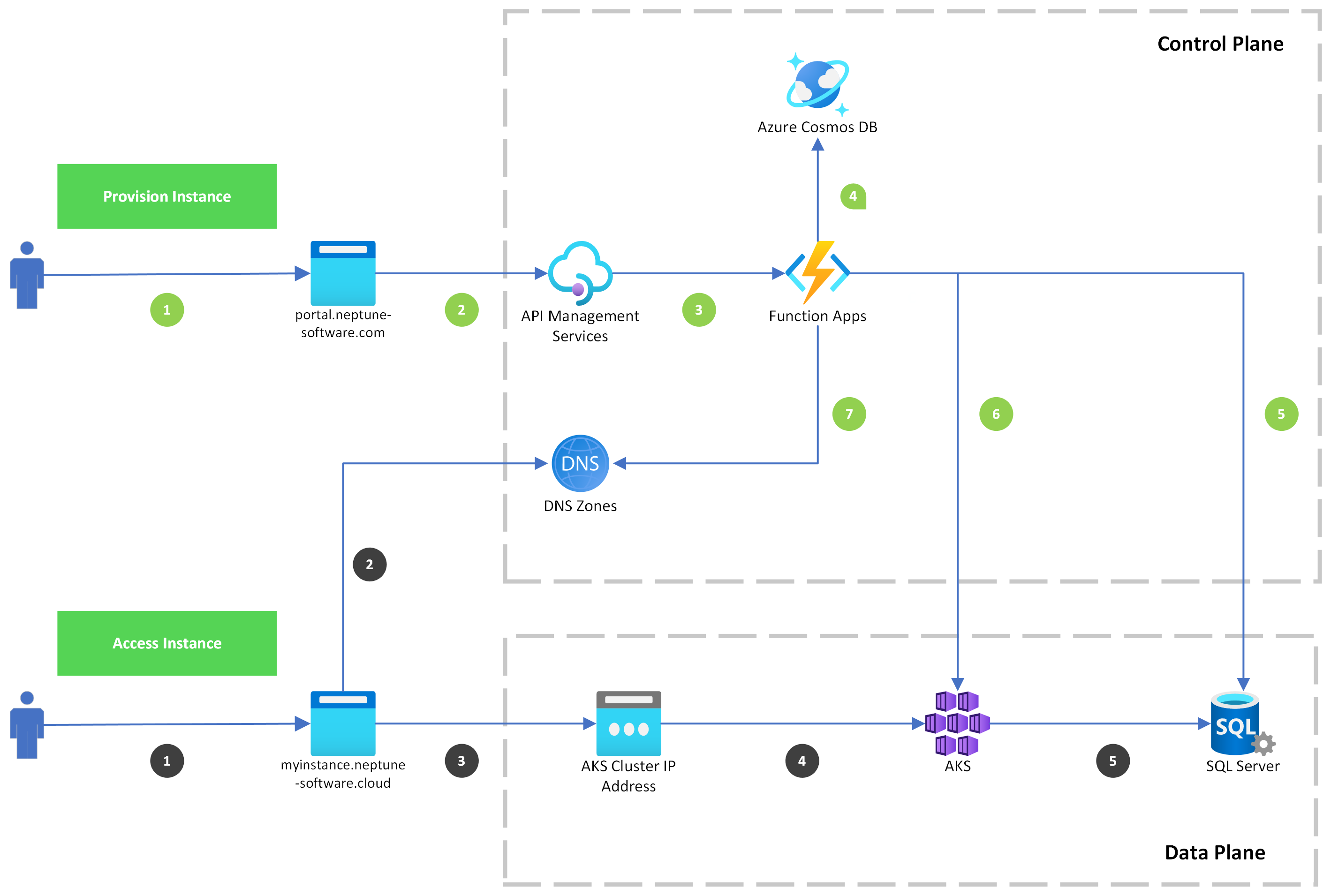

The DXP Cloud Infrastructure consists of two main components when it comes to our DXP Cloud Instances: the Control Plane and the Data Plane, comparable to Kubernetes' control plane and data plane.

A DXP Cloud Instance is a distinct, hosted DXP Open Edition instance. A customer can provision one or more of these instances (e.g. for dev, test, QA, and prod).

The Control Plane is the infrastructure responsible for orchestrating and controlling DXP Cloud Instances: provisioning, scaling, deleting, and monitoring them. The Data Plane is the infrastructure where all customers' instances run.
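
To make this split concrete, here is a minimal in-memory sketch in Python. It is not Neptune's implementation: the class names (`Instance`, `DataPlane`, `ControlPlane`), the `replicas` field, the method names, and the `acme-*` instance names are all assumptions chosen for illustration. It only shows the division of responsibility: the control plane decides what should exist, and the data plane is where instances run.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """One hosted DXP Open Edition instance (hypothetical shape)."""
    name: str
    replicas: int = 1


@dataclass
class DataPlane:
    """Where customer instances run; it holds no orchestration logic."""
    running: dict[str, Instance] = field(default_factory=dict)


class ControlPlane:
    """Orchestrates instances in the data plane (illustrative only)."""

    def __init__(self, data_plane: DataPlane) -> None:
        self.data_plane = data_plane

    def provision(self, name: str) -> Instance:
        if name in self.data_plane.running:
            raise ValueError(f"instance {name!r} already exists")
        instance = Instance(name)
        self.data_plane.running[name] = instance
        return instance

    def scale(self, name: str, replicas: int) -> None:
        if replicas < 1:
            raise ValueError("replicas must be at least 1")
        self.data_plane.running[name].replicas = replicas

    def delete(self, name: str) -> None:
        del self.data_plane.running[name]

    def monitor(self) -> dict[str, int]:
        """Report the replica count per running instance."""
        return {n: i.replicas for n, i in self.data_plane.running.items()}


# A customer with separate dev, test, and prod instances.
data_plane = DataPlane()
control_plane = ControlPlane(data_plane)
for env in ("dev", "test", "prod"):
    control_plane.provision(f"acme-{env}")
control_plane.scale("acme-prod", 3)
control_plane.delete("acme-test")
print(control_plane.monitor())
```

In this sketch the data plane is nothing more than a registry of running instances, which mirrors the idea that every lifecycle decision goes through the control plane.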

# Diagram

I've attached a high-level architecture diagram. As we're continuously building the DXP Cloud platform, this diagram will become outdated at some point. However, the high-level concepts won't change much.

I'll explain the diagram based on 2 use cases:

- Green: Provision Instance

  - The customer accesses the DXP Cloud Portal via portal.neptune-software.com.

  - The customer requests to provision an instance. This results in an HTTP request sent to an Azure API Management service (APIM).

  - The APIM proxies the HTTP request to an internal Function App, which orchestrates the provisioning process.

  - We persist all the necessary metadata about the instance in Azure Cosmos DB.

  - The Function App provisions an Azure SQL database for the instance, which serves as the data store for that DXP Open Edition instance.

  - The Function App provisions a Kubernetes workload for the instance, which serves as the compute for that DXP Open Edition instance.

  - A CNAME record is created in the DNS zone for the instance (e.g. myinstance.neptune-software.com) that resolves to the public IP address of the target AKS cluster's Ingress endpoint, making the instance available on the public internet.

- Blue: Access Instance

  - The customer accesses the DXP Cloud Instance via its dedicated URL, myinstance.neptune-software.com.

  - The DNS lookup returns the IP address of the target AKS cluster's Ingress endpoint.

  - The browser sends the HTTP request to that IP address.

  - The AKS cluster is reachable at that IP address. A reverse proxy inside the cluster (NGINX Ingress) forwards the request to the target Kubernetes workload.

  - The Kubernetes workload receives the HTTP request and uses its dedicated MS SQL database when necessary.

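
The two use cases above can be sketched end to end in Python. This is a simplified, in-memory model under stated assumptions, not the actual Function App code: `metadata_store`, `sql_databases`, `workloads`, and `dns_zone` are hypothetical stand-ins for Cosmos DB, Azure SQL, the AKS workloads, and the DNS zone. The `INGRESS_IP` value is a documentation-range address, and the `state` field and the function names are invented for illustration.

```python
# Hypothetical in-memory stand-ins for the Azure resources in the diagram.
INGRESS_IP = "203.0.113.10"  # documentation-range address, not a real endpoint
DOMAIN = "neptune-software.com"

metadata_store: dict[str, dict] = {}  # stands in for Cosmos DB
sql_databases: dict[str, dict] = {}   # stands in for Azure SQL
workloads: dict[str, str] = {}        # hostname -> workload, stands in for AKS
dns_zone: dict[str, str] = {}         # hostname -> ingress IP


def provision_instance(name: str) -> str:
    """Green flow: the steps the orchestrating function performs, in order."""
    hostname = f"{name}.{DOMAIN}"
    if hostname in dns_zone:
        raise ValueError(f"{hostname} is already provisioned")
    # Persist metadata about the instance ("state" is an assumed field).
    metadata_store[name] = {"hostname": hostname, "state": "provisioning"}
    sql_databases[name] = {}                  # dedicated data store
    workloads[hostname] = f"workload-{name}"  # dedicated compute
    dns_zone[hostname] = INGRESS_IP           # make it publicly reachable
    metadata_store[name]["state"] = "running"
    return hostname


def access_instance(hostname: str) -> tuple[str, str]:
    """Blue flow: DNS lookup, then host-based routing at the ingress."""
    ip = dns_zone[hostname]         # DNS lookup returns the ingress IP
    workload = workloads[hostname]  # the ingress picks the workload by hostname
    return ip, workload


host = provision_instance("myinstance")
print(host, "->", access_instance(host))
```

Because every instance in this model shares the same ingress IP, the hostname alone determines which workload handles a request. That is the role the reverse proxy plays in the Blue flow.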